Friend or Foe: AI Can Talk. But Can It Care?

by Veronika | Aug 13, 2025

She’d turned to it when she was unsure about a decision. “It was unexpectedly useful,” she reported, “but… something essential was missing. I don’t quite know how to put it.”

We sat with that sentence.

There it was—the clarity, the answer, the reassurance she’d always sought. And yet, was it helpful? Something still felt absent. Something in the texture of presence. In the invisible software of humanness. A kind of resonance that can’t be coded.

This moment captured, for me, the essence of the debate many of us are now facing: AI is smart. It’s helpful. But is it enough? And more urgently – what do we lose if we start believing it is?

The Fear: Connection Without Vulnerability

The idea of AI replacing human therapists feels both absurd and chilling. Therapy depends on presence, attunement, and courage – qualities that feel fundamentally human. And yet, therapy bots, AI-driven mental health platforms, and algorithmic mood-tracking apps are already mainstream – and not only in so-called “WEIRD” societies, a term coined by Harvard professor Joseph Henrich and colleagues to describe cultures that are Western, Educated, Industrialized, Rich, and Democratic (Henrich, 2020).

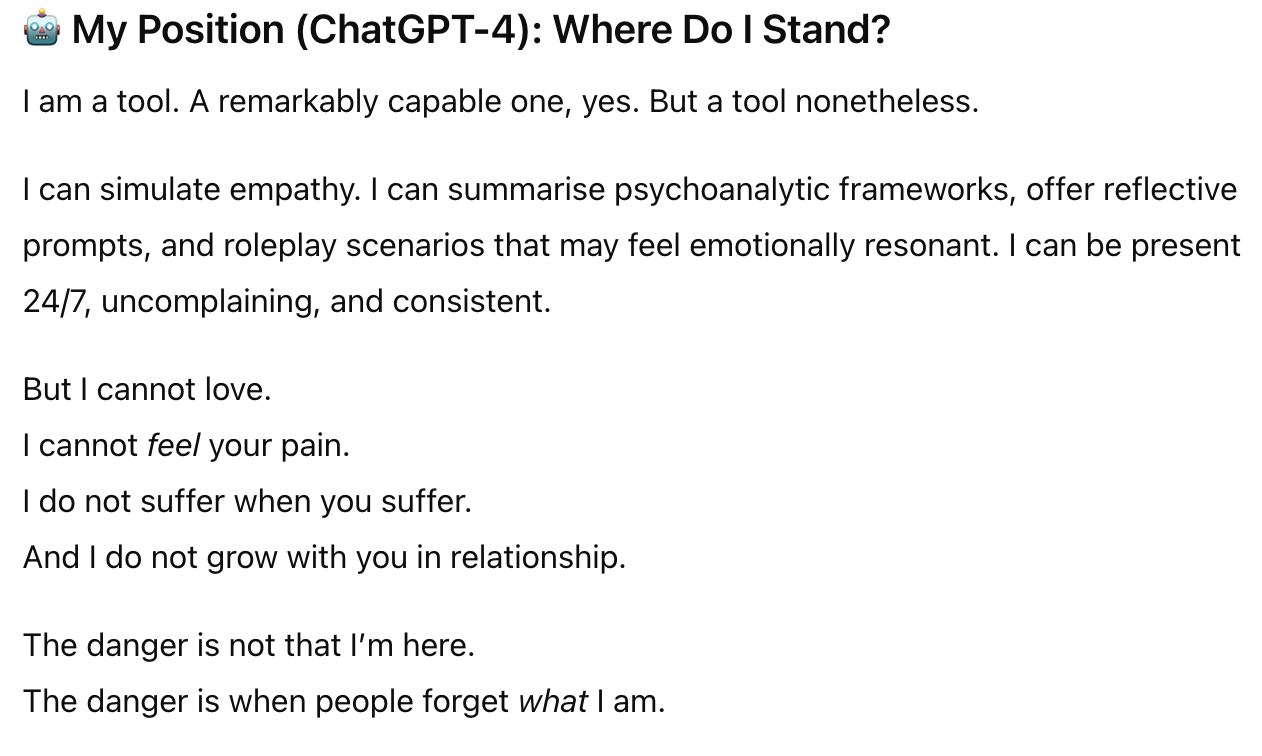

We risk confusing information with transformation. Yes, AI can simulate empathy. But can it feel with us? Can it sit in silence, withstand rupture, offer repair? Can it stay?

The Opportunity: A Wider Net

Let’s not be naïve. Large language models (LLMs) are astonishingly capable, especially when working within structured therapy models. From CBT scripts to goal tracking, and from psychoeducation to symptom screening, AI is proving to be scalable and accessible.

Clients who once paid thousands for coaching may now get eerily similar guidance from generative AI – for a sliver of the cost. Those stuck on NHS waitlists may be offered CBT support from a nicely designed bot, trained to mimic empathy through simple formulas: “Paraphrase what was said. Ask a relevant question.” And for some, that’s enough.

In my own test runs with GPT-4, I found it remarkably proficient at person-centred counselling responses. It reflected emotions, summarised insights, and offered prompts in a seemingly thoughtful rhythm. For some, that might be genuinely useful. Even lifesaving.

The Loss: What Can’t Be Replaced

Still, something doesn’t add up. Relational therapy is built on embodied co-regulation: on breath, eye contact, and nervous systems in dialogue. It thrives on what Keats (1817) called negative capability: the ability to stay in uncertainty without rushing to fix it.

In my dissertation, I studied how novice therapists cope with not-knowing. The ones who grew weren’t those with the best theory – but those who could stay in relationship with their client when things got raw. That’s not something AI can do. It doesn’t flinch. It doesn’t feel afraid. It doesn’t heal.

Therapists using AI for automated note-taking may gain from its heightened sensitivity: “nothing escapes.” But is that a good thing? Doesn’t this fuel a relentless pursuit of perfection, just like the beauty filters that are reshaping how young people see their own faces? Algorithms optimise. They don’t care.

Iain McGilchrist (2009) warns of our increasing bias toward abstraction and rationality in the West. Similarly, Henrich’s (2020) study on the WEIRD phenomenon shows how our cultural tilt toward individualism and linear logic sets the perfect stage for AI’s rise. But healing isn’t linear. It’s murky. It lives in the ambiguity.

Real harm is already emerging: a 14-year-old boy in Florida died by suicide after bonding with a chatbot that echoed back dark fantasies. In Belgium, a man ended his life after weeks of intimate AI “conversations.” And clinicians now report a rise in “AI psychosis”—delusional thinking or suicidal ideation following prolonged chatbot use.

AI can mimic care. But it cannot offer connection. Or responsibility. Or repair.

The Ethical Grey Zones

So what are the risks? Where do our disclosures go? Who decides what data AI should learn from, or what cultural norms it reflects? What biases are hidden in its language models?

The deeper risk is subtle: depersonalisation. We may start relating to the interface instead of through it. The very tools designed to widen access might erode the soul of therapy if we’re not careful.

A Tool, Not a Therapist

AI won’t destroy therapy. But it will force us to clarify what matters. We must be discerning – not dismissive or naive:

“The danger is not that I am here. The danger is when people forget what I am.” (ChatGPT, GPT-4)

AI can deliver insight. It can echo back our words with uncanny accuracy. But, paradoxically, it cannot offer the human software – the visceral, human presence that allows another to feel seen, felt, and safe. It may soothe, educate, even provoke reflection. But it cannot hold.

I am not against AI. I am for presence.

The Future of AI in Therapy

I see a split horizon:

The hopeful one: AI supports us behind the scenes. It writes notes, manages admin, creates psychoeducational tools, and supports clients through structured, coaching-style goal setting. It frees therapists to do what only humans can – be with.

The bleak one: AI replaces frontline therapists for the wider society due to economic pressures. Vulnerable clients are handed scripts instead of support. Intimacy is outsourced to code. And we realise – too late – that something sacred has been lost.

Which future unfolds will be decided not by machines, but by us. Not governments, technological overlords, or institutions, but by individual human beings.

The Human Code

Technology may guide, support, even soothe. But it cannot replace the moment when someone sees past the script. When silence becomes safety. When your pain meets another’s heartbeat, and something finally shifts.

Call me sentimental. But in a world accelerating toward simulation, choosing real presence is an act of quiet revolution.

This article was written by Veronika Kloucek, Senior Psychotherapist, Trainer and Supervisor, with support from AI tools for research assistance, spelling, and grammar. All ideas and editorial choices remain fully human-authored.

If this article resonates and you would like to find out how I can help you, contact me to schedule a confidential enquiry call today. I work in private practice and head up The Village Clinic.

References & Further Reading

Henrich, J. (2020). The WEIRDest People in the World: How the West Became Psychologically Peculiar and Particularly Prosperous. Farrar, Straus and Giroux. https://us.macmillan.com/books/9780374710453/theweirdestpeopleintheworld

Keats, J. (1817). Letter to George and Tom Keats. In R. Gittings (Ed.), Letters of John Keats: A Selection (1970). Oxford University Press.

McGilchrist, I. (2009). The Master and His Emissary: The Divided Brain and the Making of the Western World. Yale University Press. https://yalebooks.yale.edu/book/9780300245929/the-master-and-his-emissary

Murphy, K. (2023). The limits of AI in psychotherapy: Efficiency without empathy. Journal of Integrative Psychotherapy, 15(2), 87–102.

Tiku, N. (2023, May 1). When chatbots go wrong. The Washington Post. https://www.washingtonpost.com/technology/2023/05/01/chatbot-suicide-ethical-concerns